Error, Part 2: Type II and sampling

“How many would you need for a nationally representative sample? Would five be enough?” “Five thousand?” “No, five participants.” There are trials in the life of a statistician, and being asked silly questions about sampling is one of them. An inadequate sample is a great way to make a Type II error, that is, failing to find something that is actually there. Last week’s post dealt with Type I error, and Captain Statto of the pirate ship Regressor is here again to explain Type II.

After Avery mistook a seagull for a ship, Captain Statto posted Bluebeard to look-out duty. Bluebeard is known for her keen sight, so he hoped she wouldn’t repeat Avery’s mistake. Starting at the stem, she slowly turned 180° to starboard, all the way to the stern, taking in the seagulls, messages floating in bottles, and very small uninhabited islands, but no other boats. Bluebeard returned to make her report, but before she could say “All clear”, the Regressor was struck by a cannonball from the port side. Bluebeard had mistakenly failed to reject the null hypothesis (that there were no other ships in the area). She committed a Type II error based on a non-representative sample and an incomplete dataset: she had only looked on one side of the ship.

Generalisability

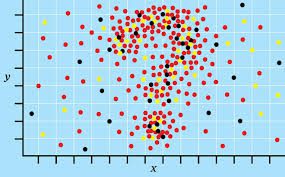

Poor old Bluebeard was trying to extrapolate the status of the entire ocean from her survey of just the starboard side, which made for a biased sample. She concentrated too hard on being 100% certain that there were no ships to starboard, and so left open the possibility of a catastrophic error on the port side.

For a given sample size and effect size, there is an inverse relationship between α (the probability of a Type I error) and β (the probability of a Type II error). Making one smaller makes the other larger. There are some recommendations as to the ratio, such as 4:1 (β to α). This corresponds to α = .05 and β = .2, that is, a power (1 − β) of .8. A more stringent Type I error threshold, of the order of α < .01, is appropriate in the natural sciences. In most social science research, however, Type II error is of greater concern. There are several ways of dealing with the bias that results from sampling and of reducing Type II error.
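The sketch below illustrates the trade-off between α and β. It assumes a two-sided comparison of two group means analysed with a normal (z) approximation. It also uses a standardised effect size of d = 0.5 and 64 participants per group. These are illustrative values that do not come from the post, and the function name `type_ii_error` is hypothetical. The sample stays fixed, and each tighter α pushes β up.

```python
from statistics import NormalDist


def type_ii_error(alpha: float, effect_size: float, n_per_group: int) -> float:
    """Approximate beta for a two-sided, two-sample z-test with equal group sizes.

    Power is approximated as Phi(d * sqrt(n / 2) - z_{1 - alpha/2}). The rejection
    region in the opposite tail is ignored because its contribution is negligible.
    """
    std_normal = NormalDist()
    critical = std_normal.inv_cdf(1 - alpha / 2)       # two-sided critical value
    shift = effect_size * (n_per_group / 2) ** 0.5     # expected z under the alternative
    power = std_normal.cdf(shift - critical)
    return 1 - power                                   # beta = 1 - power


# Same sample, same effect: only alpha changes.
for alpha in (0.10, 0.05, 0.01):
    beta = type_ii_error(alpha, effect_size=0.5, n_per_group=64)
    print(f"alpha = {alpha:.2f} -> beta = {beta:.2f}")
```

With these assumed numbers, α = .05 gives a β close to the conventional .2. Moving to α = .01 roughly doubles the chance of missing a real effect. The only way to cut both error rates is to enlarge the sample.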

A previous post looked at the relationship between qualitative and quantitative methods. There’s one point on which they differ vastly: how many people is enough people. For tests of difference, there’s a (long and complicated) formula that calculates the sample size. It uses the power of the test, the expected effect size, and the Type I error probability. Luckily, there are tables in stats books and on interweb sites that give the minimum sample size based on these three criteria.

A design has a number of independent variables, each with a number of levels, and each cell contains only a fraction of the total sample. It’s half for one variable with two levels, like gender. It’s a quarter for two variables with two levels each, like gender and an age variable that just distinguishes children from adults. The total sample therefore has to be large enough for every cell to reach the required size. If the achieved sample is smaller than the target sample, which it almost always is, combining age groups or leaving out a gender comparison can help.
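The sketch below shows what such tables compute. It uses a common normal-approximation formula for a two-sided comparison of two group means:

n = 2(z₁₋α/₂ + z₁₋β)² / d² per group, where d is the standardised effect size.

This formula is an assumption chosen for illustration, not necessarily the one behind any particular table. Exact t-test tables give slightly larger numbers for small samples. The hypothetical helper `total_sample` then scales a per-cell requirement up by the number of cells in a fully crossed design.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate participants needed per group (normal approximation, two-sided test)."""
    z = NormalDist().inv_cdf
    n = 2 * (z(1 - alpha / 2) + z(power)) ** 2 / effect_size ** 2
    return math.ceil(n)  # round up: you can't recruit a fraction of a person


def total_sample(cell_size: int, levels: list[int]) -> int:
    """Total sample when every combination of levels forms its own cell."""
    return cell_size * math.prod(levels)


cell = n_per_group(0.5)           # medium effect, alpha = .05, power = .8
print(cell)                       # 63
print(total_sample(cell, [2]))    # gender only: 126
print(total_sample(cell, [2, 2])) # gender x child/adult: 252
```

Each extra two-level variable doubles the total, which is why dropping a comparison is the usual rescue when recruitment falls short.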

That’s just so random!

Another post mentioned the relationship between sample size and margin of error. The margin of error is the standard error of a statistic multiplied by a critical value (about 1.96 for 95% confidence). The bigger the sample, the smaller the standard error. The Type I error rate is fixed by the chosen α whatever the sample size. However, a smaller standard error makes a real effect easier to detect, so a larger random sample reduces the risk of Type II error.

An alternative to random samples is matched samples. Here, individual members of two or more groups are matched on characteristics such as demographics, to control for their effect as extraneous variables. This is common in clinical research, where a treatment group is matched to a control group on things like age, gender, marital status, and socio-economic status.
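The sketch below makes the link between sample size and margin of error concrete. It assumes a simple random sample and a proportion, say the share of respondents answering “yes”. It uses SE = √(p(1 − p)/n) with the worst case p = 0.5 and 95% confidence. The function names are illustrative only.

```python
import math
from statistics import NormalDist


def standard_error(p: float, n: int) -> float:
    """Standard error of a sample proportion p from a simple random sample of size n."""
    return math.sqrt(p * (1 - p) / n)


def margin_of_error(n: int, p: float = 0.5, confidence: float = 0.95) -> float:
    """Critical z value times the standard error; p = 0.5 gives the largest SE."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    return z * standard_error(p, n)


# Five participants versus five thousand.
for n in (5, 100, 1000, 5000):
    print(f"n = {n:>5}: margin of error = ±{margin_of_error(n):.1%}")
```

Under these assumptions, five participants give a margin of error of roughly ±44 percentage points, and five thousand give about ±1.4. The normal approximation is itself shaky at n = 5, which rather makes the point. Because of the square root, quadrupling the sample only halves the standard error.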

Separately, there are lots of rules of thumb for the minimum sample size for particular statistical tests. To establish the psychometric properties of a scale, five participants for each item are recommended. The minimum sample size recommended for Principal Components Analysis is 300. These principles override the rule on tests of difference described earlier. The most general principle of all, though, is that there’s no such thing as too many data.

The best way to avoid Type II error is to find an adequate sample for the statistical test you propose. Generally, the more complex the design the larger the sample size, and for certain more complex stats it’s larger still. However, there’s also a balance to be struck between getting enough information to be certain and getting enough information to actually finish the bloomin’ report. Get the balance right, and it’s plain sailing. Get it wrong, and you’re sunk.
